A mixed methods evaluation involves collecting, analyzing, and integrating data from both quantitative and qualitative sources. I sometimes find that although I plan evaluations with mixed methods, I do not think purposefully about how or why I am choosing and ordering those methods. Intentionally planning a mixed methods design can strengthen both evaluation practice and the evaluative conclusions reached.

Here are three common mixed methods designs, each with its own purpose. Use these designs when you need to (1) see the whole picture, (2) dive deeper into your data, or (3) know what questions to ask.

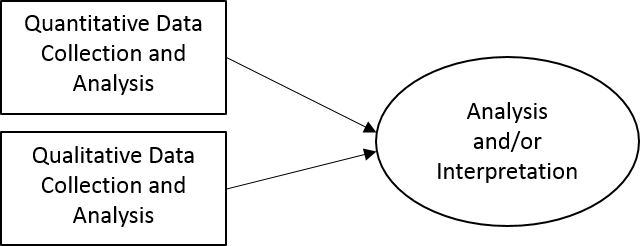

1. When You Need to See the Whole Picture

First, the convergent parallel design allows evaluators to view the same aspect of a project from multiple perspectives, creating a more complete understanding. In this design, quantitative and qualitative data are collected simultaneously and then brought together in the analysis or interpretation stage.

For example, in an evaluation of a project whose goal is to attract underrepresented minorities into STEM careers, a convergent parallel design might include surveys of students asking Likert questions about their future career plans, as well as focus groups to ask questions about their career motivations and aspirations. These data collection activities would occur at the same time. The two sets of data would then come together to inform a final conclusion.
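As a rough illustration of how the two data sets might be brought together at the interpretation stage, here is a minimal Python sketch. Everything in it is hypothetical: the group labels, the Likert ratings, the theme codes, and the `side_by_side_summary` helper are assumptions made for this example, not part of any real evaluation or library.

```python
from collections import Counter

# Hypothetical survey data: (student group, 1-5 Likert rating for
# "I plan to pursue a STEM career"). Values are invented for illustration.
survey_responses = [
    ("first_year", 4), ("first_year", 5), ("first_year", 3),
    ("second_year", 2), ("second_year", 3), ("second_year", 2),
]

# Hypothetical focus group excerpts, already coded with one theme each.
focus_group_codes = [
    ("first_year", "role_models"), ("first_year", "role_models"),
    ("first_year", "salary"),
    ("second_year", "course_difficulty"), ("second_year", "course_difficulty"),
    ("second_year", "role_models"),
]


def side_by_side_summary(survey, codes):
    """Pair each group's mean rating with its most frequent focus group theme."""
    ratings = {}
    for group, rating in survey:
        ratings.setdefault(group, []).append(rating)

    themes = {}
    for group, theme in codes:
        themes.setdefault(group, Counter())[theme] += 1

    summary = {}
    for group in sorted(set(ratings) | set(themes)):
        group_ratings = ratings.get(group, [])
        group_themes = themes.get(group, Counter())
        summary[group] = {
            "mean_rating": (
                round(sum(group_ratings) / len(group_ratings), 2)
                if group_ratings else None
            ),
            "top_theme": (
                group_themes.most_common(1)[0][0] if group_themes else None
            ),
        }
    return summary


summary = side_by_side_summary(survey_responses, focus_group_codes)
for group, row in summary.items():
    print(group, row)
```

Placing the numbers and the themes in one table per group is only one way to integrate the strands; the point is that neither data set is used to design the other, and they meet only at the end.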

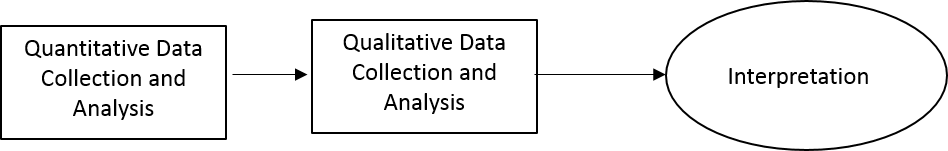

2. When You Need to Dive Deeper into Data

The explanatory sequential design uses qualitative data to further explore quantitative results. Quantitative data are collected and analyzed first. These results are then used to shape the instruments and questions for the qualitative phase, in which qualitative data are collected and analyzed.

For example, in the same hypothetical STEM project, instead of conducting a survey and focus groups at the same time, evaluators would conduct the survey and analyze its results before creating the focus group protocol. The focus group questions can then be designed to enrich understanding of the quantitative results. While the quantitative data might tell evaluators how many Hispanic students are interested in pursuing engineering, the qualitative phase could follow up on students’ motivations behind these responses.
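The hand-off from the quantitative phase to the qualitative phase can be sketched in a few lines of Python. This is only an illustration under assumed inputs: the survey items, the ratings, the 3.0 cutoff, and the `items_needing_follow_up` helper are all hypothetical, and a real protocol would be written by the evaluator rather than generated from a template.

```python
# Hypothetical phase 1 results: 1-5 Likert ratings per survey item.
item_ratings = {
    "I plan to pursue an engineering career": [2, 1, 3, 2],
    "I enjoy my math classes": [4, 5, 4, 3],
    "I know an engineer I can ask for advice": [1, 2, 2, 1],
}


def items_needing_follow_up(ratings_by_item, threshold=3.0):
    """Return (item, mean) pairs with a mean below threshold, lowest first.

    The threshold is an arbitrary assumption for this sketch.
    """
    means = {
        item: sum(ratings) / len(ratings)
        for item, ratings in ratings_by_item.items()
        if ratings
    }
    low = [(item, m) for item, m in means.items() if m < threshold]
    return sorted(low, key=lambda pair: pair[1])


# Phase 2 planning: turn each flagged result into a draft focus group prompt.
follow_up = items_needing_follow_up(item_ratings)
focus_group_prompts = [
    f'Survey ratings were low for "{item}". What shapes your view on this?'
    for item, _mean in follow_up
]

for prompt in focus_group_prompts:
    print(prompt)
```

The ordering matters here: the prompts cannot be drafted until the survey results exist, which is what distinguishes this design from the convergent parallel one.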

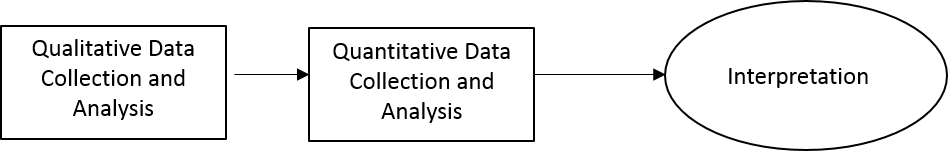

3. When You Need to Know What to Ask

The exploratory sequential design allows an evaluator to investigate a situation more closely before building a measurement tool, providing guidance on what questions to ask, what variables to track, or what outcomes to measure. It begins with qualitative data collection and analysis to investigate unknown aspects of a project. These results are then used to inform quantitative data collection.

If an exploratory sequential design were used to evaluate our hypothetical project, focus groups would first be conducted to explore themes in students’ thinking about STEM careers. After these data are analyzed, the conclusions would be used to construct a quantitative instrument that measures the prevalence of the discovered themes in the larger student body. The focus group data could also be used to create more meaningful and direct survey questions or response sets.
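To make the move from discovered themes to a draft instrument concrete, here is a small Python sketch. The theme codes, the two-mention cutoff, the placeholder item wording, and the `draft_survey_items` helper are assumptions for illustration only; actual item wording would come from the evaluator's reading of the focus group data.

```python
from collections import Counter

# Hypothetical theme codes assigned to focus group excerpts in phase 1.
coded_excerpts = [
    "family_expectations", "role_models", "role_models",
    "cost_of_college", "role_models", "family_expectations",
]

# Response set for the draft items (an assumed 5-point agreement scale).
LIKERT_SCALE = [
    "Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree",
]


def draft_survey_items(codes, min_mentions=2):
    """Create one placeholder survey item per theme mentioned often enough.

    Themes are returned most frequent first. The min_mentions cutoff is an
    arbitrary assumption for this sketch.
    """
    counts = Counter(codes)
    return [
        {
            "theme": theme,
            "mentions": n,
            "stem": f"[Draft item about {theme.replace('_', ' ')}]",
            "scale": LIKERT_SCALE,
        }
        for theme, n in counts.most_common()
        if n >= min_mentions
    ]


draft_items = draft_survey_items(coded_excerpts)
for item in draft_items:
    print(item["mentions"], item["stem"])
```

The survey built from these drafts would then be administered to the larger student body to estimate how common each theme is.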

Intentionally choosing a design that matches the purpose of your evaluation will help strengthen evaluative conclusions. Studying different designs can also generate ideas for new ways to approach other evaluations.

For further information on these designs and more about mixed methods in evaluation, check out these resources:

Creswell, J. W. (2013). What is Mixed Methods Research? (video)

Except where noted, all content on this website is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

EvaluATE is supported by the National Science Foundation under grant number 1841783. Any opinions, findings, and conclusions or recommendations expressed on this site are those of the authors and do not necessarily reflect the views of the National Science Foundation.
