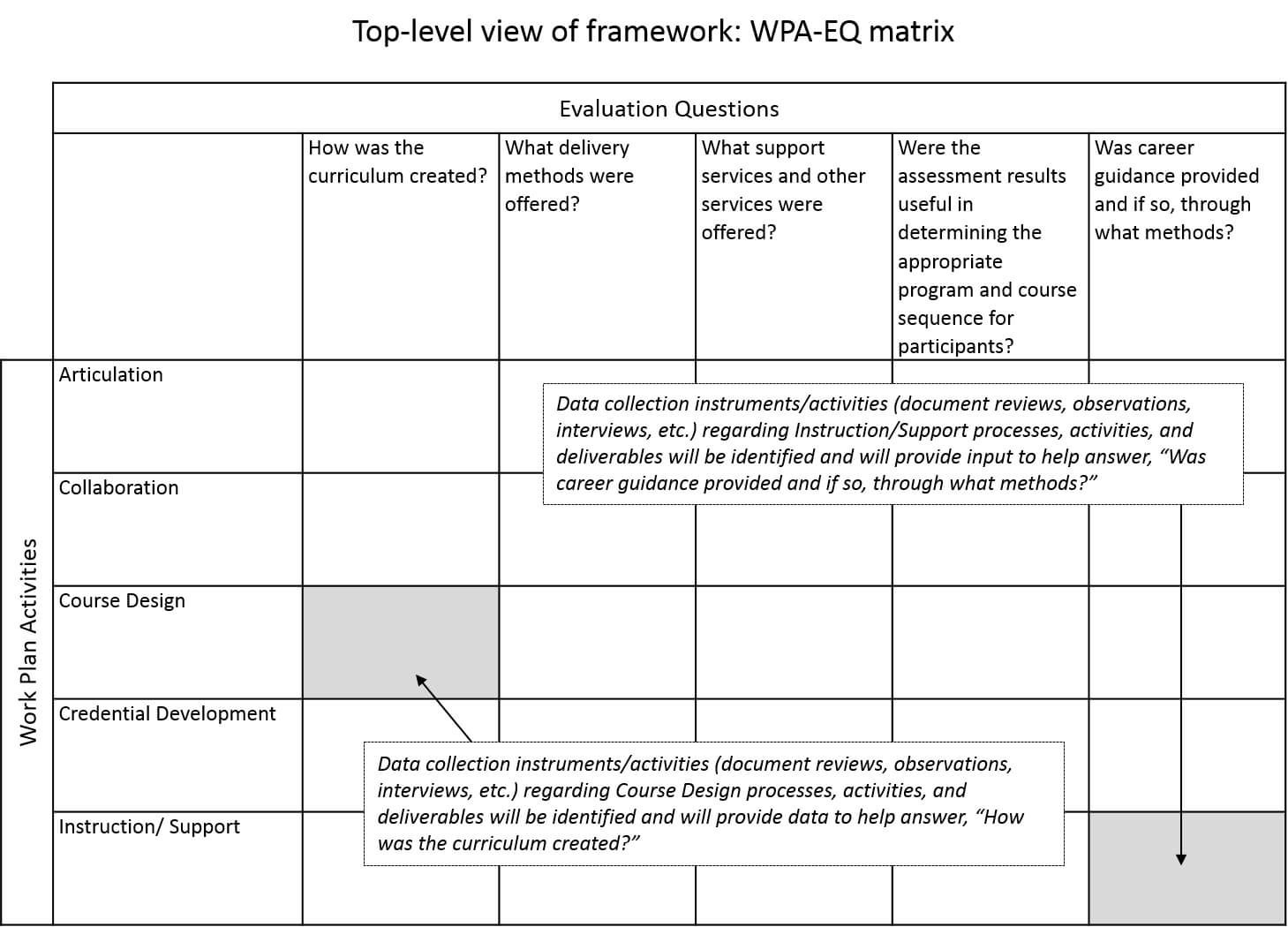

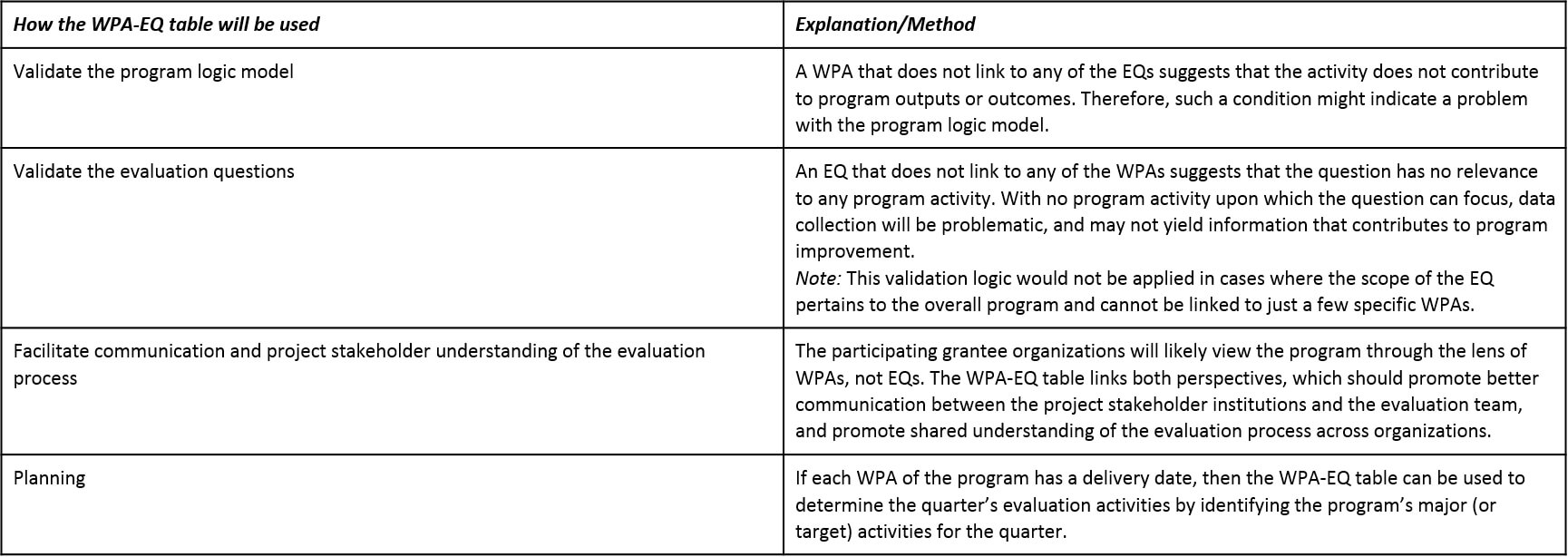

Designing a rigorous and informative evaluation depends on communication with program staff to understand planned activities and how those activities relate to the program sponsor’s objectives and the evaluation questions that reflect those objectives (see white paper related to communication). At NC State Industry Expansion Solutions, we have worked on evaluation projects long enough to know that such communication is not always easy, because program staff and the program sponsor often look at the program from two different perspectives: program staff focus on work plan activities (WPAs), while the program sponsor may be more focused on the evaluation questions (EQs). To facilitate communication at the beginning of an evaluation project and to assist in its design and implementation, we developed a simple matrix technique that links the WPAs and the EQs (see below).

For each WPA, we link one or more EQs and indicate what types of data collection events will take place during the evaluation. During project planning and management, we use this crosswalk of WPAs and EQs to plan qualitative and quantitative data collection events.
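For readers who keep their planning documents in code or spreadsheets, the crosswalk described above can be sketched as a small data structure. This is only an illustration, not the tool we use: the activity labels, question labels, data collection events, and the helper names `activities_by_question` and `find_gaps` are all hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical crosswalk: each work plan activity (WPA) is linked to the
# evaluation questions (EQs) it informs and to its planned data collection events.
crosswalk = {
    "WPA 1": {"eqs": ["EQ 1", "EQ 2"],
              "data_collection": ["staff interviews", "participant survey"]},
    "WPA 2": {"eqs": ["EQ 2"],
              "data_collection": ["document review"]},
    "WPA 3": {"eqs": [], "data_collection": []},  # not yet linked to any EQ
}

# Hypothetical full list of evaluation questions from the program sponsor.
all_eqs = ["EQ 1", "EQ 2", "EQ 3"]


def activities_by_question(crosswalk):
    """Invert the crosswalk: for each EQ, list the WPAs linked to it."""
    by_eq = defaultdict(list)
    for wpa, row in crosswalk.items():
        for eq in row["eqs"]:
            by_eq[eq].append(wpa)
    return dict(by_eq)


def find_gaps(crosswalk, all_eqs):
    """Return WPAs with no linked EQ and EQs that no WPA addresses."""
    covered = {eq for row in crosswalk.values() for eq in row["eqs"]}
    unlinked_wpas = [wpa for wpa, row in crosswalk.items() if not row["eqs"]]
    uncovered_eqs = [eq for eq in all_eqs if eq not in covered]
    return unlinked_wpas, uncovered_eqs


if __name__ == "__main__":
    print(activities_by_question(crosswalk))
    print(find_gaps(crosswalk, all_eqs))
```

A gap check like this mirrors the conversation the matrix is meant to prompt: an activity with no linked question, or a question with no linked activity, is worth raising with program staff and the sponsor early in the project.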

The above framework may be more helpful with the formative assessment (process questions and activities). However, it can also enrich the knowledge gained by the participant outcomes analysis in the summative evaluation in the following ways:

Understanding how the program has been implemented will help determine fidelity to the program as planned, which will help determine the degree to which participant outcomes can be attributed to the program design.

Details on program implementation that are gathered during the formative assessment, when combined with evaluation of participant outcomes, can suggest hypotheses regarding factors that would lead to program success (positive participant outcomes) if the program is continued or replicated.

Details regarding the data collection process that are gathered during the formative assessment will help assess the quality and limitations of the participant outcome data, and the reliability of any conclusions based on that data.

For us, this matrix approach is a quality check on our evaluation design that also helps during implementation. Maybe you will find it helpful, too.

Except where noted, all content on this website is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

EvaluATE is supported by the National Science Foundation under grant number 2332143. Any opinions, findings, and conclusions or recommendations expressed on this site are those of the authors and do not necessarily reflect the views of the National Science Foundation.
